As I review large JavaScript implementations within our firm, I keep finding basic practices missing, for various reasons. If you are running a JS project that is building an SPA, please make sure you read these twenty points. You might not need all of them if the application is very small. Many of the must-haves apply to non-JS projects as well.

NOTE: I have started linking various related articles via hyperlinks into this article. Hope it is of use to you.

MUST HAVES

1. Functional Document

Each program being executed needs a clear functional requirements document that explains the use cases, broken up by functional component. A lot of the time, UX design is done with very little understanding of the requirements, and that design becomes the source of truth for developers writing code. It will contain few or no validation or data requirements. Having just the look and feel and the page flow is not enough to develop a UI. I am not sure why this is hard to understand, but with crappy timeline plans, I have seen more than enough project managers ask the UI team to start developing and then change things as the requirements become clear.

Functional requirements have to be clear and complete for the UI developers to plan the components and services. One of the great misconceptions about Agile is that Agile = no need for detailed requirements. Agile exists to enable the team to adapt to changing needs, not to do away with requirements.

2. Architecture: SAD

A System Architecture Document (SAD) is a necessity, and it should have a 4+1 view of the system being developed. The document should address the security aspects (cross-cutting concerns) of the JS implementation. The JSP/ASP (server-side rendering) approach to security will not work for a JS SPA solution. For example, user profile information in the browser is the last thing we would like to see (meaning, it is a NO-NO to hold profile data in the browser).

With the controllers and view models all moving into the browser, it is necessary to have a layered architecture thought through for the JS code as well.

- See 4 + 1 view for details

- See ‘Patterns of Enterprise Application Architecture’ for details on layered architecture; also this wiki page provides some brief on layered architecture

3. System Currency Document & Standards

JS platforms are evolving at a rapid pace. Most of them are open source and free to use, for enterprises as well. Not all of their releases are backward compatible. For example, Angular 2.0 (the upcoming one) and EmberJS in the past are releases that put features ahead of staying backward compatible. In such a changing environment, the Architect of a large JS program should be mandated to establish a formal or informal Architecture Review Board that will approve and finalize the list of UI libraries. All finalized UI frameworks, and any other platforms and third-party products, should be recorded with their version numbers.

Any development, SIT, UAT and Production setup should be done against this System Currency. In addition, the system currency should be used from time to time to evaluate technical debt at a high level.
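As a rough illustration of checking a project against the System Currency, the sketch below compares a project's declared dependencies with an approved list. The function name `findCurrencyViolations`, the data shapes and the version numbers are assumptions made for this example; they do not come from any particular tool.

```javascript
// Hypothetical System Currency: library name -> approved exact version.
const approvedVersions = { angular: '1.4.8', lodash: '3.10.1' };

// Returns one entry per dependency whose declared version is not the approved one.
// The comparison is an exact string match, so a range such as "^1.4.8" is flagged too.
function findCurrencyViolations(approved, dependencies) {
  return Object.keys(dependencies)
    .filter(function (name) {
      return !Object.prototype.hasOwnProperty.call(approved, name) ||
        approved[name] !== dependencies[name];
    })
    .map(function (name) {
      const isListed = Object.prototype.hasOwnProperty.call(approved, name);
      return {
        name: name,
        declared: dependencies[name],
        approved: isListed ? approved[name] : null // null means "never approved"
      };
    });
}

// Example input, shaped like the "dependencies" section of a package.json.
const declared = { angular: '1.4.8', lodash: '4.0.0', jquery: '2.1.4' };
console.log(findCurrencyViolations(approvedVersions, declared));
```

A check of this kind could run as part of the build, so that a drift from the approved list shows up before it reaches SIT or UAT.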

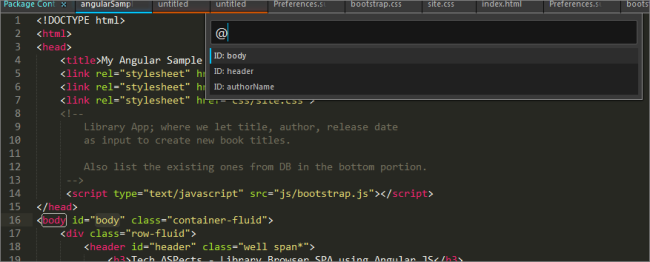

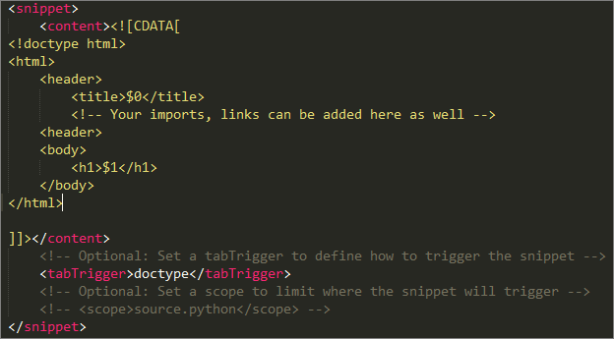

4. Seed Project

JS frameworks are still evolving, unlike the Java/.NET space. For AngularJS there are various seed projects available that set up the basic pieces out of the box: authentication, interceptors, and a project structure (to hold UI components, build files, test code, module/sub-module loaders, Grunt or Gulp tasks, etc.). If you are starting a JS project and do not have a standard project (code) structure, then you are clearly letting the developers reinvent the wheel.

5. NFRs – from Day 0

Key NFRs for a UI include performance, accessibility, offline access, compliance (possibly regulatory compliance applicable to the domain), audit needs, expected longevity (for example, does this app need to keep working for 10 years before the next upgrade discussion?), internationalization, security needs and, most of all, usability expectations. The requirements document should have sections, in addition to the functional details, that brief the reader on each of these.

Many a time UI development is halfway done when such discussions start; I have been part of such projects and discussions as well. Thinking through and raising these aspects before you start a program is essential.

6. Continuous Integration

For some reason, folks think CI is not applicable to JS development. Not sure why. Grunt tasks should be set up so that every change is tested via the Jasmine/Mocha/Protractor tests against the browsers listed in the requirements document. Without CI, browser issues are found in the last phase of testing, which results in patch code and ugly fixes.
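A minimal sketch of such a Grunt task is shown below. It assumes the grunt-karma plugin is installed and that a Karma configuration file named `karma.conf.js` sits at the project root; the target name `ci` and the task name `test` are choices made for this example.

```javascript
// Gruntfile.js (sketch). Assumes grunt-karma is installed and karma.conf.js exists.
module.exports = function (grunt) {
  grunt.initConfig({
    karma: {
      ci: {
        configFile: 'karma.conf.js', // assumed location of the Karma configuration
        singleRun: true              // run the suite once and exit, as a CI server needs
      }
    }
  });

  // Makes the "karma" task available to Grunt.
  grunt.loadNpmTasks('grunt-karma');

  // "grunt test" is what the CI job would invoke on every change.
  grunt.registerTask('test', ['karma:ci']);
};
```

The CI server then only needs to run `grunt test` on each commit; which browsers are launched is decided in the Karma configuration.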

7. TDD (at the least write tests)

I am not going to preach TDD here, even though I love TDD and how, if done properly, it shapes the design of a system. Yes!! TDD enables you to do a good design!!!

Each JS developer should have Karma running, so that each change he/she makes results in immediate execution of the Jasmine/Mocha tests. Nothing else in this setup provides such immediate feedback.
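A developer-side Karma setup along these lines might look like the sketch below. The file globs and the browser list are assumptions for illustration, and it presumes the matching Karma plugins (karma-jasmine, karma-chrome-launcher, karma-firefox-launcher) are installed.

```javascript
// karma.conf.js (sketch). Paths and browsers are assumptions for this example.
module.exports = function (config) {
  config.set({
    frameworks: ['jasmine'],        // or 'mocha', with the karma-mocha plugin
    files: [
      'src/**/*.js',                // assumed location of application code
      'test/**/*.spec.js'           // assumed location of the test files
    ],
    browsers: ['Chrome', 'Firefox'],
    autoWatch: true,                // re-run the tests whenever a watched file changes
    singleRun: false,               // keep Karma running while the developer works
    reporters: ['progress']
  });
};
```

With `autoWatch` on and `singleRun` off, saving a file is enough to trigger the tests in every configured browser.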

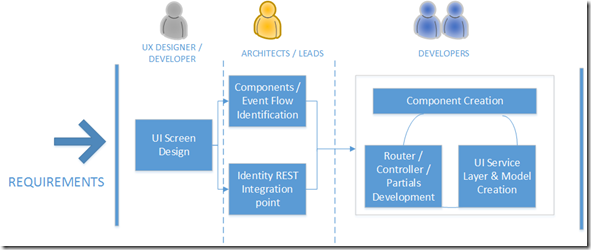

8. Role oriented team structure

Unlike the past, where UI development was one-dimensional and the focus was only on developing the views, present-day JS MV* based UIs are single- or multi-page applications, not web pages anymore. Hence there is an increased need to break up and structure the UI developers based on the type of work. This enables them to get better at what they do and avoid errors, at an increased pace. Check out this post, which discusses the role-based team structure for large-scale JS development.

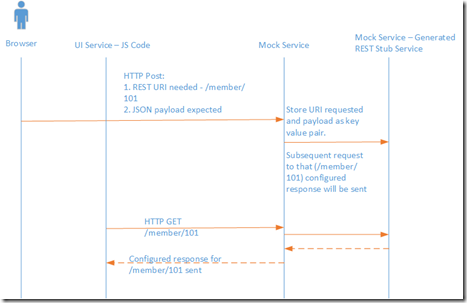

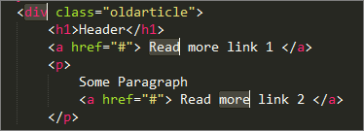

9. One Pagers

Everyone from developers to architects balks when there is a need to write a large document; at least this is a prevalent issue. One pagers (actually half-a-pagers) are brought in to make documentation easier. One pagers can be per screen or per use case; essentially they are written by architects who analyze the UI page by page and write down the key aspects of its workings. This becomes the blueprint for the developers to follow.

A sample one pager for a screen should capture the following. Other aspects are covered by project-wide standards, such as coding standards and best practices (like avoiding $rootScope).

A one pager should contain the list of:

- Reusable UI Components to make use of

- Security recommendations to follow

- Events to handle and quote the samples

- Backend services to integrate

10. Brown bag (OR) Over-Tea-Snack sessions

JS frameworks and related techniques are evolving at a rapid pace (for that matter, big data and even JDKs are moving ahead with tons of changes); hence it is necessary to inculcate the habit of constant learning in the team. Otherwise the project will depict a new stack in the design document, but the code will be from the past (like typical products in the market: same old thing in a new cover). To avoid this, brown bag sessions should be encouraged.

Brown bag sessions bring in a few key things:

- Informal Setting: The session is informal, so even a discussion where people are munching on their sandwiches is fine. In India this might be hard, so, similar to a brown bag, an over-the-tea-and-snacks session will provide the setting.

- Lighter Preparation – Group Discussion: Unlike a formal presentation, one person can start on a topic and many others can contribute.

The essence of these sessions is to ensure the team catches up on the latest changes that are must-haves. Typical offshore teams are very large compared to the teams I have worked with in the Bay Area. In such cases, break these up into multiple sessions. A session should not have more than 10 to 15 people.

PITFALLS TO AVOID

Common pitfalls I have seen in various AngularJS programs are listed below, in no particular order.

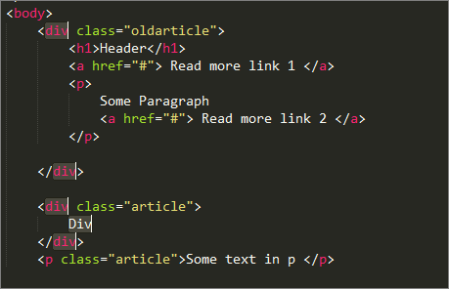

1. Objects, Objects – No hanging attributes

For some reason, developers stop seeing objects and move to an attribute view in JS. This might be because in JSPs (for example) they were handed the backend objects and just needed to pick the attributes to be shown. Now UI developers get to write the controller and the service invocation layer as well. Frameworks like AngularJS have $scope; developers who do not understand it properly see it as a cloud into which they can throw anything and the view gets it.

For example, if 5 attributes from 2 different objects need to be shown in the UI, the attributes attached to the scope should be namespaced, like $scope.student.age, $scope.student.name, $scope.student.discipline, rather than just $scope.age, $scope.name, $scope.discipline.

$scope.balance = personfromservicecall.savings.balance; // avoid such attribute throwing over the wall (via $scope)

$scope.person.savingsacct.balance = personfromservicecall.saccount.balance; // ensure each UI field we handle or attach to the scope belongs to an object

Essentially, start having your value models in place. Create value models only as a last resort, when the view's needs cannot be met by the models from the services.
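To make the "5 attributes from 2 objects" case concrete, here is a small sketch. The helper name `attachStudentView` and the shapes of the `student` and `account` objects are assumptions for this example; `$scope` is passed in as a plain object so the snippet can run outside AngularJS.

```javascript
// Attaches five UI fields to the scope, grouped under the two objects they belong to.
// Nothing is placed directly on $scope as a bare attribute.
function attachStudentView($scope, student, account) {
  $scope.student = {
    name: student.name,
    age: student.age,
    discipline: student.discipline
  };
  $scope.savingsAccount = {
    balance: account.balance,
    currency: account.currency
  };
}

// Usage with a stand-in scope object and hypothetical service results.
const scope = {};
attachStudentView(
  scope,
  { name: 'Asha', age: 21, discipline: 'Physics' },
  { balance: 120, currency: 'INR' }
);
console.log(scope.student.age, scope.savingsAccount.balance);
```

When the models returned by the services already match what the view needs, they can be attached as-is (for example `$scope.student = student`), and no separate value model has to be built.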

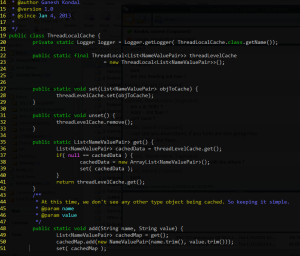

2. Encapsulation

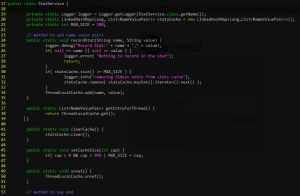

In AngularJS implementations, I often see the JS promise returned by the REST service call being parsed on success or on error (see async invocation in AngularJS) in the controllers. Per the single responsibility principle in SOLID, retrieving the object is the responsibility of the service and NOT of the controller. Java/.NET developers who move to JS development are more used to the synchronous invocation style; hence they just return the promise to whichever controller calls that service. Every controller then tries to parse the promise and build the returned object.

Rather, if you have a function within the service (an AngularJS factory) that resolves the promise to the object you expect, the work is done once and not in every controller.
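The idea can be sketched as follows. Here `httpGet` stands in for AngularJS's `$http.get` (which resolves to a response object carrying the payload in `data`); the endpoint path, the payload shape and the names `createPersonService` and `PersonController` are assumptions for this example.

```javascript
// Service: the only place that knows the shape of the REST response.
function createPersonService(httpGet) {
  return {
    getPerson: function (id) {
      // Hypothetical endpoint; the response is unwrapped and mapped exactly once, here.
      return httpGet('/api/persons/' + id).then(function (response) {
        const body = response.data;
        return {
          id: body.id,
          name: body.name,
          savingsAccount: { balance: body.savings.balance }
        };
      });
    }
  };
}

// Controller: receives a ready-made person object and only hands it to the view.
function PersonController($scope, personService, personId) {
  return personService.getPerson(personId).then(function (person) {
    $scope.person = person;
  });
}

// Usage with a fake transport, so the sketch runs without AngularJS or a server.
const fakeHttpGet = function () {
  return Promise.resolve({ data: { id: 7, name: 'Asha', savings: { balance: 120 } } });
};
const scope = {};
PersonController(scope, createPersonService(fakeHttpGet), 7).then(function () {
  console.log(scope.person.name, scope.person.savingsAccount.balance);
});
```

The controller still works with a promise, but it is a promise of the finished object; if the backend payload changes, only the service needs to be touched.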

3. Sub module level loaders

For a large JS implementation (anything that spans 40 or more screens), the first priority is to identify the modules and sub-modules. Loading of the UI should happen at the sub-module level, and the seed projects we build should have sub-module level loaders. There are two implications if we do not do this correctly: the first is performance and the second is security. Performance is the obvious one; security is less so. Let us understand (or review) SPAs from a source-loading perspective, and then the security aspect:

- JS Source in the browser can be seen by all

- Majority of the applications have role based ACL needs.

Loading more screens than necessary forces the UI developer to do entitlement checks in the UI and show/hide the screen (or part of the screen) for the viewer. With the entire source loaded into the browser, it takes no more than a few minutes to debug and understand the entitlement-based checks.

Breaking up the modules into levels according to the ACL is a necessity. Security in SPAs is a separate topic on its own. To leave you with one point here: the only safe mechanism is to secure the REST (backend) services with appropriate ACLs; that is mandatory.
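A sub-module level loader can be sketched in a few lines. The name `createSubModuleLoader` and the `fetchBundle` function (which would download one sub-module's source and return a promise) are hypothetical; the sketch also assumes the server only serves a bundle to users whose role is entitled to it.

```javascript
// Creates a loader that fetches each sub-module at most once, and only on demand.
function createSubModuleLoader(fetchBundle) {
  const loaded = new Map(); // sub-module name -> promise of the loaded bundle

  return function load(name) {
    if (!loaded.has(name)) {
      // First request for this sub-module: start the download and remember it.
      loaded.set(name, fetchBundle(name));
    }
    return loaded.get(name);
  };
}

// Usage with a fake fetch function, so the sketch runs without a server.
const load = createSubModuleLoader(function (name) {
  console.log('fetching sub-module:', name);
  return Promise.resolve({ name: name });
});

load('accounts'); // triggers a fetch
load('accounts'); // served from the cache, no second fetch
load('reports');  // a different sub-module, fetched separately
```

Screens the user never visits are never downloaded, which helps with both points raised above: less to load, and less source for an unentitled user to read.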

4. Code Comments

With lots of Java/JEE or similar developers moving to JS, their view thus far has been: “JS is scripting stuff and only a few things are done in it, so comments are not that necessary”. JS is not type safe like C# or Java. Hence, without comments (and proper usage conventions defined as project standards), understanding a bunch of JS files is next to impossible. Provide comments at the folder level at the least.

5. No $rootScope

Do not pollute the global space in JS. Have namespaces that hold the variables. Similar to global variables, $rootScope in AngularJS is the topmost scope defined for the application. Ensuring that the variables (UI fields) used are in a proper namespace (like person.savingsaccount.balance) makes the code easier to understand and helps avoid unwarranted collisions (and thereby bugs).

6. Entitlements in Browser

With the source loaded and readable in the browser, holding the entitlements and checking them in the browser enables a hacker to understand the internals of your system. Rather, have the UI (SPA) application ask the backend whether an action is permissible. The session token sent with that request should enable the backend system to determine the user and the role, from which the access rights (entitlements) can be worked out.
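The backend side of that question might look like the sketch below. The in-memory `sessions` and `permissions` tables, the action names and the function `isPermitted` are all hypothetical; a real system would consult its session store and ACL source instead.

```javascript
// Hypothetical server-side data: session token -> user and role, role -> allowed actions.
const sessions = {
  'token-abc': { user: 'asha', role: 'teller' },
  'token-xyz': { user: 'ravi', role: 'manager' }
};
const permissions = {
  teller: ['account:view'],
  manager: ['account:view', 'account:close']
};

// Answers "may the holder of this session token perform this action?".
// The browser only ever sees the yes/no answer, never the entitlement table.
function isPermitted(sessionToken, action) {
  const session = sessions[sessionToken];
  if (!session) {
    return false; // unknown or expired token
  }
  const allowed = permissions[session.role] || [];
  return allowed.indexOf(action) !== -1;
}

console.log(isPermitted('token-abc', 'account:close')); // teller may not close accounts
console.log(isPermitted('token-xyz', 'account:close')); // manager may
```

The same check has to guard the REST service that actually performs the action; the answer given to the UI is only a hint for what to display.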

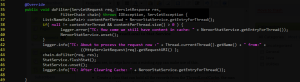

7. Not using Karma

Writing Jasmine or Mocha tests is mandatory, but not running them across the channels of consumption (web or mobile browsers) makes them useless. Running them via Jenkins/Grunt scripts is one option, but Karma provides instantaneous feedback by running the configured test cases against the configured browsers in real time. Not using Karma will impact the timeline, as bugs will be found at a later point, either during hourly or overnight tests or at QA time. With every passing minute, the buggy function, controller or service could be called by other layers, resulting in various other issues. Debugging and resolution time spent on such avoidable issues is a criminal waste (in my view).

8. jQuery based Event handling

Folks never seem to move on from jQuery. Even after adopting JS MV* frameworks like AngularJS, they continue to do UI event handling with jQuery. It is a double whammy: first, you are introducing an unwanted dependency on jQuery; second, you are not learning the features of AngularJS, for example. Avoid jQuery completely.
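For comparison, here is roughly what click handling looks like when it is left to AngularJS. The controller name, the field names and the template in the comments are assumptions for this example; `ng-click` is the built-in AngularJS directive for binding click events.

```javascript
// Controller written as a plain function so it can be exercised without a browser.
// In an AngularJS app it would be registered with something like:
//   angular.module('app').controller('CounterController', CounterController);
// and the (assumed) template would bind the event declaratively:
//   <button ng-click="increment()">Clicked {{counter.clicks}} times</button>
// No jQuery selector and no manual .on('click', ...) wiring is involved.
function CounterController($scope) {
  $scope.counter = { clicks: 0 };

  // Invoked by ng-click; the view updates through data binding.
  $scope.increment = function () {
    $scope.counter.clicks += 1;
  };
}

// Usage with a stand-in scope object.
const scope = {};
CounterController(scope);
scope.increment();
console.log(scope.counter.clicks);
```

Because the handler is just a function on the scope, it can be unit tested in Jasmine or Mocha without touching the DOM.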

9. Controller bloat & DOM Manipulation

Services in AngularJS do the grunt work, and controllers are like managers: they should make sure events and exceptions get handled in the correct way and that proper UI navigation takes place. Beyond that they should NOT try to do more. If they stick to the above, they will de facto stay small.

Folks typically complain that the number of UI fields they have is way too high (80, for example, in one of the projects I worked on) and that there is no way to reduce the size of the controller. For those, just go and check out the OOAD principle of encapsulation. Controllers do not need to know the details of the value model. They are there to pass it to the view; views place the appropriate attributes in the appropriate places. With two-way data binding in AngularJS, there is no need to fetch the UI values and set them on the models by hand.

The second thing is DOM manipulation: avoid manipulating the DOM directly. There are tons of articles that talk about the UI event handling capability of AngularJS.

10. Local NPM

With so much change happening in open source (particularly JS) frameworks, it is justified to have a local NPM repository that holds your approved versions of each framework. Each library or framework in the local NPM should have gone through the necessary license checks, as we don’t want a developer casually adding to his/her source a third-party library from the internet that is not free for commercial use.

As I write this, there are more articles that seem necessary – one for sure, about the security of JS MV* applications.